Gantarctica

A year and a half or so ago, considering how poor I am at updating the Lynch Lab’s Instagram (@thelynchlab), I started tooling around with the idea of using a GAN - or Generative Adversarial Network - to just make fake pictures of Antarctica that could be fed into the account. It seemed like a perfect outgrowth of a lot of the work we do, combining Antarctic fieldwork and ecology with high-performance computing and artificial intelligence.

GANs are pretty neat and a little terrifying. A few years ago, NVIDIA (the company that makes graphics cards) did a project in which they used a GAN to generate headshots of fake celebrities. Basically they fed a bunch of pictures of real celebrities into an AI model, which figured out how to make faces that an average person could easily believe were real celebrities.

The process works more or less like this: a generator makes fake data. To start, it doesn’t really know how to fake whatever you’re trying to make, but it’s trying to fool a discriminator. Every time it makes some fake data (in the previous case, a fake celebrity face), the discriminator takes a look and has to decide whether it’s real or fake, based on all the data it’s seen (real celebrity photos). Both generator and discriminator learn from this process, but the eventual outcome is that the generator gets so good that the discriminator can’t really tell what’s real and what’s not. It’s often compared to an art forger and a cop, but this is an imperfect analogy.
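For anyone who wants the tug-of-war in symbols, the standard formulation from the original GAN paper is a two-player minimax game. This is the textbook version, not anything specific to Runway; StyleGAN changes the loss in its details. Here $D(x)$ is the discriminator’s estimated probability that an image $x$ is real, and $G(z)$ is the image the generator makes from a random noise vector $z$:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator pushes $V$ up by scoring real photos near 1 and fakes near 0. The generator pushes it down by making $D(G(z))$ approach 1. At the theoretical optimum, the generator’s images follow the same distribution as the real data and $D$ outputs $1/2$ everywhere, which is the formal version of “can’t tell what’s real.”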

In my case, I have a whole library of images of Antarctica that I’ve taken in my 8 years in the field. Gazillions of pictures of penguins, of seals, of people, and of land- and seascapes. It seemed like it shouldn’t be a hard problem, especially since, to my eye, Antarctic landscapes are fairly simple. There’s a much more limited color palette than you’d find in a lot of places, with a lot of white, grey, and a limited selection of blues. Even the sunsets take on a particular yellowish cast.

But for someone like me, with a working understanding of AI but skills that lie mostly in other disciplines, building a GAN is not a task for an afternoon. And, with a variety of more pressing projects, it ended up on the back burner.

Recently I came across RunwayML, a web app that serves as a front end for a wide range of machine learning models. It has StyleGAN (NVIDIA’s follow-up to the celebrity-face GAN) built in, along with a really easy interface for uploading training data, training a model, and generating images.

The trick for many image-based machine learning applications is to provide good training data, and to start I decided to take an expansive view. I have a lot of Antarctic images that fall into the landscape category.

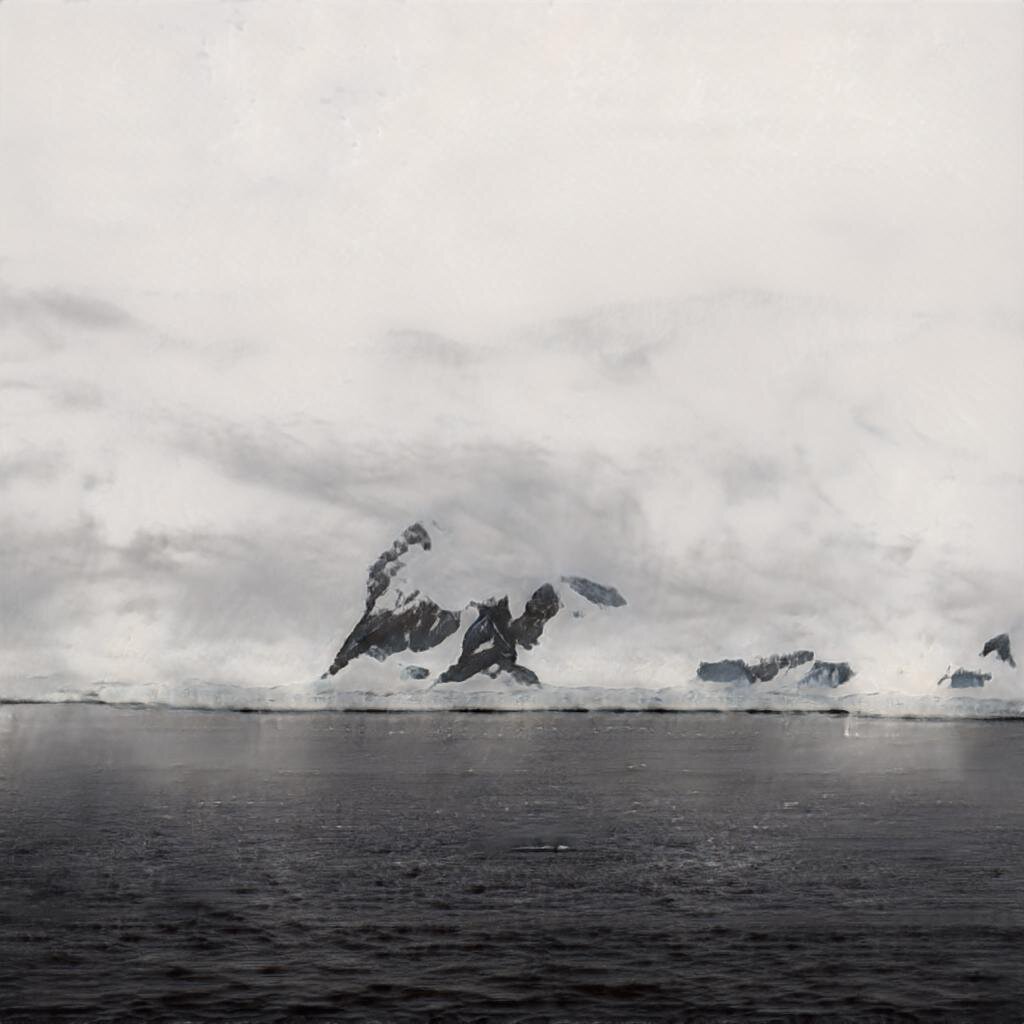

So I gathered the images that were explicitly of landscapes along with images of icebergs, of penguins in front of landscapes, of people in landscapes and added them into the training set. Then I pulled in images I’d sourced for another project (grabbed from Google Images and Bing) and photos from other lab members. In it all went. The model expects square images and Runway provides a pre-processing step to crop. There are a few options - you can individually crop, do a center crop, take a random crop, or leave uncropped (in which case they will be squeezed into a square shape). I decided to let it do a random crop, since it was late and I didn’t feel like spending a couple of hours cropping what was now a 750-image training set.
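Runway’s implementation of these options isn’t described here, but the three behaviors are easy to picture. Below is a hypothetical Python sketch of what a center crop, a random crop, and the “leave uncropped” squeeze each do to a rectangular photo. The function names are illustrative, and the boxes use the (left, top, right, bottom) convention that Pillow’s `Image.crop` expects. It assumes each crop is the largest square that fits. Runway’s random crop may pick smaller squares.

```python
import random

def center_crop_box(width, height):
    """Largest square centered in the image, as (left, top, right, bottom)."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)

def random_crop_box(width, height, rng=random):
    """Largest square at a uniformly random offset along the longer axis."""
    side = min(width, height)
    left = rng.randint(0, width - side)   # only varies for landscape photos
    top = rng.randint(0, height - side)   # only varies for portrait photos
    return (left, top, left + side, top + side)

def squeeze_scale(width, height, target):
    """'Uncropped' option: per-axis scale factors that squash the whole
    image into a target x target square (unequal factors distort shapes)."""
    return (target / width, target / height)
```

For a 6000 x 4000 landscape photo, `center_crop_box(6000, 4000)` gives `(1000, 0, 5000, 4000)`, so only the middle two-thirds of the frame survives. The random crop slides that same square somewhere along the long edge. The squeeze keeps everything but compresses the long axis more than the short one.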

I set up the training process to train for 3000 steps, the default, and let it work.

3000 steps of training - the images get a little more Antarctica-like

The outcome was underwhelming. The images definitely had the feel of Antarctica, but were really poor quality. Plus there were some pretty big problems. The model had clearly picked up on the people, penguins, and ships present in some of the training data and concluded that it was okay to put strange, dark shapes in the images. One had a strange wizard-like shape in it. Several had black blobs that might have been inspired by penguins.

Is it a penguin?

I had a couple of options here. I could continue training the model - it obviously needed it since many of the textures were very rough - and hope that it overcame the biggest problems that were there (i.e. that it would give up on penguins and wizards). Or I could start over and more carefully craft the training set. I chose the latter. I pulled out all the pictures in which the landscape was not the main focus (like pictures of penguins with a landscape in the background), plus all the photos that were only of icebergs, photos with ships, photos of people, photos where the horizon wasn’t visible, and ended up with a good set of images basically all showing just mountains, islands, ice, water, and snow.

But my worry was that the training set was too small now. More training data is always better and I was worried it wouldn’t be able to learn textures well if there weren’t enough examples of all the ways that rock or ice can look. So I headed out to the internet.

Using a web-crawler script, I crawled Flickr for all the images tagged “Antarctica” from a variety of areas frequented by tourists. It was a rough pass and I certainly didn’t get all of them, but I managed to pull down over a thousand extra images. Of course, with a search that broad, many of those images had the same problems as the original set, so I removed all the pictures that didn’t meet the strict landscape criteria or that couldn’t be cropped so that they met them.
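The crawler script itself isn’t reproduced here. The following is a hypothetical sketch of one way to do the same job. It assumes you query Flickr’s official REST API (`flickr.photos.search`) rather than scraping web pages. The function builds a paged search URL for photos tagged “antarctica” inside a bounding box. The API key is a placeholder you would get from Flickr. The example box, drawn loosely around the Antarctic Peninsula and South Shetland Islands, is illustrative only.

```python
from urllib.parse import urlencode

FLICKR_REST = "https://www.flickr.com/services/rest/"

def flickr_search_url(api_key, bbox, page=1, per_page=250):
    """Build a flickr.photos.search request URL.

    bbox is (min_lon, min_lat, max_lon, max_lat), the order Flickr expects.
    """
    params = {
        "method": "flickr.photos.search",
        "api_key": api_key,              # placeholder: your own Flickr API key
        "tags": "antarctica",
        "bbox": ",".join(str(v) for v in bbox),
        "extras": "url_l",               # ask for a large-size image URL per photo
        "per_page": per_page,
        "page": page,
        "format": "json",
        "nojsoncallback": 1,             # plain JSON rather than JSONP
    }
    return FLICKR_REST + "?" + urlencode(params)

# Hypothetical region: roughly the Antarctic Peninsula and South Shetlands.
PENINSULA = (-65, -66, -56, -61.5)
url = flickr_search_url("YOUR_API_KEY", PENINSULA, page=2)
```

Each JSON response lists photos, and when a large size exists, it includes a `url_l` field to download. Stepping `page` upward until the results run out gathers the rest. Flickr caps how many results a single query will return, so searching several smaller boxes, one per tourist area, tends to reach more photos than one huge box.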

Then the crop. I worried that part of my problem was the random crop - if I want the GAN to make landscape images, I have to show it only images that are good landscapes. If some of the random crops were only of the sky or water, that would be part of what the GAN would learn. So I manually cropped each of the 1120 images in the training set, choosing a square crop that contained the elements of a decent landscape photograph. Then back to training.
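To put a number on that worry, here is a hypothetical back-of-the-envelope calculation. It assumes a portrait-orientation photo, a random crop that is a full-width square, and a crop offset drawn uniformly from every valid position. It then counts how many of those crops still contain the horizon (rows are numbered from 0 at the top).

```python
def horizon_keep_fraction(width, height, horizon_row):
    """Fraction of full-width square crops of a portrait image that
    still contain the horizon row, assuming uniformly random offsets."""
    if height <= width:
        raise ValueError("expects a portrait image (height > width)")
    side = width
    offsets = range(0, height - side + 1)  # every valid top edge for the crop
    hits = sum(1 for top in offsets if top <= horizon_row < top + side)
    return hits / len(offsets)
```

For a 3000 x 4500 portrait shot with the horizon high in the frame at row 500, `horizon_keep_fraction(3000, 4500, 500)` is 501/1501, about 0.33. Roughly two out of three random crops would show only foreground water or rock with no horizon at all. With the horizon at the middle row (2250), every full-width crop keeps it. For landscape-orientation photos the square slides sideways instead, so the horizon survives but the main peak may not.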

I chose the same 3000 steps so I could monitor the process. This first pass yielded better images - no strange dark blobs - but still had texture issues.

The unifying feature in my mind is improbable geology. There are images of mountains apparently suspended in the air, or large rock formations hanging off of cliffs with seemingly no concern for gravity. More work to be done. The color palette clearly had been ironed out (there are no greens or reds in the images), and the sky in most images contains fairly convincing clouds. Water is challenging, with lots of repeated texture or very coarse and unconvincing wave patterns. I continued training for another 1000 steps.

At 4000 steps there’s clear improvement. In small form, there are a number of reasonably convincing images. There are some wildly unconvincing ones, however, with the same issues as before: mountains suspended in the air and strange leaning rocks. The water texture has improved quite a bit, but the texture of beaches is still very difficult. I think one of the challenges here is that the cloud ceiling is often quite low in Antarctica, which means that mountains are often swallowed by cloud that is a somewhat similar color and texture to snow. And sometimes the peaks of those mountains poke up through the clouds again, so it’s understandable that features somewhat like that would pass into the generated images. Still, there’s clear room to improve.

Onward to 5000 steps.

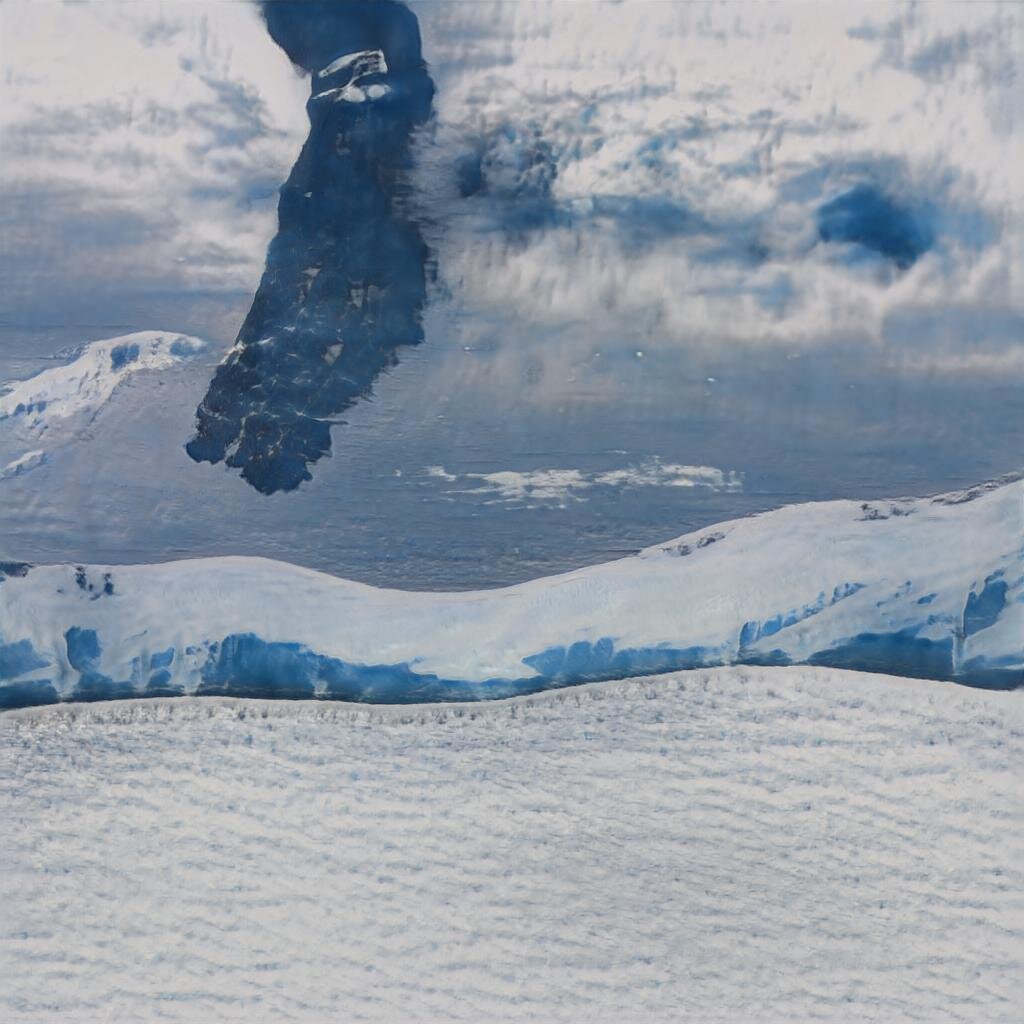

With 5000 steps we’re getting some quite convincing images. Certainly at small sizes I could believe them. There are some clearly noticeable issues, though. Water textures are much better but still unconvincing in some cases. Most noticeable (now that the water makes some sense) are the reflections. This is the kind of thing that stands out very clearly to a human eye but is less obvious to a computer. Notice how the reflections don’t really mirror the scene above them (and sometimes don’t mirror it at all). A few images remain wildly improbable, such as one (shown above) in which the sea seems to part and flow up the face of a cliff.
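The reflection problem can be made concrete with a crude, hypothetical check. It assumes a grayscale image stored as rows of brightness values and a perfectly flat waterline at a known row. Under those assumptions, a calm reflection should roughly repeat the rows above the waterline in reverse order below it. Real water is never a perfect mirror, since ripples and viewing angle distort it, so this is a rough heuristic rather than anything the GAN is trained against.

```python
def mirror_error(gray, waterline):
    """Mean absolute difference between rows above the waterline and
    their mirrored counterparts below it.

    gray: list of equal-length rows of brightness values.
    waterline: index of the first row of water. A perfect mirror scores 0.
    """
    depth = min(waterline, len(gray) - waterline)  # rows available on both sides
    total, count = 0, 0
    for k in range(depth):
        above = gray[waterline - 1 - k]  # k rows above the waterline
        below = gray[waterline + k]      # k rows below it
        total += sum(abs(a - b) for a, b in zip(above, below))
        count += len(above)
    return total / count if count else 0.0
```

A perfectly mirrored test image scores 0, and the score grows as the water stops echoing the mountains above it.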

Onward to 6000 steps.

At 6000 steps, the same problems exist but they’re less severe. Water is improving. The structure of mountains is very, very believable. Icebergs, though… The model is clearly a little confused about how icebergs can have strange shapes but mountains should not. I’m not confident it will learn that one before we start overfitting. I’m pretty happy with this one but think there’s some work left. Onward to 7000 steps.

I did not expect 7000 steps to be almost universally worse. Of the 50 images I generated, most were nonsensical.
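One way to keep checkpoint comparisons like this fair is to feed every checkpoint the same latent vectors. Then differences between batches come from the model rather than from which random samples happened to be drawn. The sketch below is minimal and rests on a few assumptions. It assumes a StyleGAN-style generator whose input is a 512-dimensional standard normal vector (StyleGAN’s default latent size). The seed is arbitrary, and passing the vectors to each checkpoint is left to whatever tool runs the generator.

```python
import random

def fixed_latents(n=50, dim=512, seed=0):
    """Return n reproducible latent vectors, each with dim standard normal entries.

    The same seed always yields the same vectors, so every checkpoint
    can be sampled from identical starting points.
    """
    rng = random.Random(seed)  # private generator; doesn't touch global state
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n)]
```

Calling `fixed_latents()` again returns identical vectors. Image 17 at 6000 steps and image 17 at 7000 steps then start from the same point in latent space.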

For the next pass, I’ll add 2000 steps to get us to 9000 and see what’s become of it.